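Background

In an earlier article, "K8S Ecosystem Weekly | Google chooses Cilium as the next-generation data plane for GKE", I covered Google's announcement that it would use Cilium as the next-generation data plane for GKE, along with the story behind it.

Google chose Cilium mainly to improve container security and observability on the GKE platform. So what exactly is Cilium, and why is it so attractive?

Here is how the official website describes it: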

Cilium is open source software for transparently securing the network connectivity between application services deployed using Linux container management platforms like Docker and Kubernetes.


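Why the emphasis on "Linux container management platforms"? That comes down to how Cilium is implemented. Cilium is built on eBPF, a Linux kernel technology that lets you dynamically insert control logic into the Linux kernel itself, which is what allows it to meet observability and security requirements.

Concepts alone are rather abstract, so in this section we go straight to hands-on practice with Cilium.

Preparing the cluster

Here I use KIND to create a multi-node local cluster.

Writing the configuration file

When creating the cluster, we use a configuration file to disable KIND's default CNI plugin.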

kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
- role: worker
- role: worker
- role: worker
networking:
  disableDefaultCNI: true
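If you want to vary the number of workers, the configuration above can be generated rather than hand-edited. The following is a minimal sketch, not something from the original setup: `render_kind_config` is a hypothetical helper name, and it only reproduces the fields shown above.

```python
def render_kind_config(workers: int = 3, disable_default_cni: bool = True) -> str:
    """Render a kind config with one control-plane node and `workers` worker nodes."""
    if workers < 0:
        raise ValueError("workers must be non-negative")
    lines = [
        "kind: Cluster",
        "apiVersion: kind.x-k8s.io/v1alpha4",
        "nodes:",
        "- role: control-plane",
    ]
    lines += ["- role: worker"] * workers
    lines += [
        "networking:",
        # Must be nested under `networking`, otherwise kind does not see the setting.
        f"  disableDefaultCNI: {str(disable_default_cni).lower()}",
    ]
    return "\n".join(lines) + "\n"
```

Writing the result of `render_kind_config(3)` to a file named kindconfig should give the same content as the listing above.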

Starting the cluster

Name the configuration file kindconfig and pass it with the --config flag. The --image flag selects the node image used to create the cluster; here I use kindest/node:v1.19.0@sha256:6a6e4d588db3c2873652f382465eeadc2644562a64659a1da4db73d3beaa8848 to create a cluster running Kubernetes v1.19.0, the latest release at the time of writing.

(MoeLove) ➜ ~ kind create cluster --config=kindconfig --image=kindest/node:v1.19.0@sha256:6a6e4d588db3c2873652f382465eeadc2644562a64659a1da4db73d3beaa8848

Creating cluster "kind" ...

✓ Ensuring node image (kindest/node:v1.19.0) 🖼

✓ Preparing nodes 📦 📦 📦 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing StorageClass 💾

✓ Joining worker nodes 🚜

Set kubectl context to "kind-kind"

You can now use your cluster with:

kubectl cluster-info --context kind-kind

Have a question, bug, or feature request? Let us know! https://kind.sigs.k8s.io/#community 🙂
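A pinned image reference like the one passed to --image has three parts: a name, a tag, and a sha256 digest of 64 hexadecimal characters. As a rough sketch (the function name `split_image_ref` is my own, and this is a simplification of how container tools parse references), the following splits such a reference and rejects a digest that was accidentally truncated when copying:

```python
import re

_DIGEST_RE = re.compile(r"^sha256:[0-9a-f]{64}$")

def split_image_ref(ref: str):
    """Split 'name:tag@sha256:<hex>' into (name, tag, digest); tag and digest may be None."""
    name_tag, _, digest = ref.partition("@")
    digest = digest or None
    if digest is not None and not _DIGEST_RE.match(digest):
        raise ValueError(f"malformed digest: {digest!r}")
    # Only a ':' after the last '/' separates the tag, so a registry port
    # such as localhost:5000/img is not mistaken for a tag.
    last_slash = name_tag.rfind("/")
    colon = name_tag.rfind(":")
    if colon > last_slash:
        return name_tag[:colon], name_tag[colon + 1:], digest
    return name_tag, None, digest
```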

Checking the status

Because we disabled KIND's default CNI, every Node in the cluster is currently NotReady, waiting for a CNI to be initialized.

(MoeLove) ➜ ~ kubectl get nodes

NAME STATUS ROLES AGE VERSION

kind-control-plane NotReady master 85s v1.19.0

kind-worker NotReady <none> 49s v1.19.0

kind-worker2 NotReady <none> 49s v1.19.0

kind-worker3 NotReady <none> 49s v1.19.0
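If you want to script this check instead of reading the table by eye, the output of kubectl get nodes can be parsed as plain text. This is a small sketch under the assumption that the output has the default column layout shown above; `not_ready_nodes` is a hypothetical helper, and a status such as Ready,SchedulingDisabled is deliberately reported as not ready.

```python
def not_ready_nodes(kubectl_output: str):
    """Return the names of nodes whose STATUS column is not exactly 'Ready'."""
    rows = [line.split() for line in kubectl_output.strip().splitlines()]
    if not rows:
        return []
    header, body = rows[0], rows[1:]
    name_col, status_col = header.index("NAME"), header.index("STATUS")
    # Blank lines produce empty rows; skip them.
    return [row[name_col] for row in body if row and row[status_col] != "Ready"]
```

Feeding it the listing above would return all four node names; once a CNI is running, the list should become empty.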

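Deploying Cilium

Cilium can be deployed in several ways. Here we pick the simplest one and deploy it directly with Helm 3.

Adding the Helm repository

Cilium provides an officially maintained Helm repository, so let's add it first.

Note: use Helm 3. In an earlier article, "K8S Ecosystem Weekly | Helm v2 enters the countdown to the end of maintenance", I explained that Helm v2's maintenance period is counting down: in three months it will stop receiving security patches, at which point maintenance of Helm v2 ends for good.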

(MoeLove) ➜ ~ helm repo add cilium https://helm.cilium.io/

"cilium" has been added to your repositories

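Preloading the image

This step is optional. Every Node has to download the cilium/cilium:v1.8.2 image, which is time-consuming, so we can use kind load docker-image to load the image from the host's Docker into the cluster created by KIND.

# Pull the image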

(MoeLove) ➜ ~ docker pull cilium/cilium:v1.8.2

v1.8.2: Pulling from cilium/cilium

Digest: sha256:9dffe79408025f7523a94a1828ac1691b997a2b1dbd69af338cfbecc8428d326

Status: Image is up to date for cilium/cilium:v1.8.2

docker.io/cilium/cilium:v1.8.2

# Load the image into the KIND cluster

(MoeLove) ➜ ~ kind load docker-image cilium/cilium:v1.8.2

Image: "cilium/cilium:v1.8.2" with ID "sha256:009715be68951ab107617f04dc50bcceb3d3f1e0c09db156aacf95e56eb0d5cc" not yet present on node "kind-worker3", loading...

Image: "cilium/cilium:v1.8.2" with ID "sha256:009715be68951ab107617f04dc50bcceb3d3f1e0c09db156aacf95e56eb0d5cc" not yet present on node "kind-control-plane", loading...

Image: "cilium/cilium:v1.8.2" with ID "sha256:009715be68951ab107617f04dc50bcceb3d3f1e0c09db156aacf95e56eb0d5cc" not yet present on node "kind-worker", loading...

Image: "cilium/cilium:v1.8.2" with ID "sha256:009715be68951ab107617f04dc50bcceb3d3f1e0c09db156aacf95e56eb0d5cc" not yet present on node "kind-worker2", loading...

Once the image has been loaded, you can double-check with the following command:

for i in $(docker ps --filter label=io.x-k8s.kind.cluster=kind -q)
do
  docker exec "$i" ctr -n k8s.io -a /run/containerd/containerd.sock i ls | grep cilium
done

Deploying Cilium with Helm

(MoeLove) ➜ ~ helm install cilium cilium/cilium --version 1.8.2 \

--namespace kube-system \

--set global.nodeinit.enabled=true \

--set global.kubeProxyReplacement=partial \

--set global.hostServices.enabled=false \

--set global.externalIPs.enabled=true \

--set global.nodePort.enabled=true \

--set global.hostPort.enabled=true \

--set global.pullPolicy=IfNotPresent \

--set config.ipam=kubernetes \

--set global.hubble.enabled=true \

--set global.hubble.relay.enabled=true \

--set global.hubble.ui.enabled=true \

--set global.hubble.metrics.enabled="{dns,drop,tcp,flow,port-distribution,icmp,http}"

NAME: cilium

LAST DEPLOYED: Wed Sep 2 21:03:23 2020

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

You have successfully installed Cilium with Hubble Relay and Hubble UI.

Your release version is 1.8.2.

For any further help, visit https://docs.cilium.io/en/v1.8/gettinghelp

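A few of these options deserve a short explanation:

- global.hubble.enabled=true: enables Hubble.

- global.hubble.metrics.enabled="{dns,drop,tcp,flow,port-distribution,icmp,http}": selects which metrics Hubble exposes; if it is not set, Hubble metrics are disabled.

- global.hubble.ui.enabled=true: enables Hubble UI.

We will come back to what Hubble actually is in a moment.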
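A long chain of --set flags is easy to mistype, especially the `{a,b,c}` list syntax used for the metrics option. As an illustration only, here is a sketch that builds the flag list from a dictionary; `helm_set_args` is a hypothetical helper of mine, not part of Helm, and it handles just booleans, flat lists, and plain scalars.

```python
def helm_set_args(values: dict) -> list:
    """Turn {'a.b': True, 'c': ['x', 'y']} into ['--set', 'a.b=true', '--set', 'c={x,y}']."""
    args = []
    for key, value in values.items():
        if isinstance(value, bool):
            rendered = "true" if value else "false"
        elif isinstance(value, (list, tuple)):
            # Helm's --set syntax writes lists as {item1,item2,...}
            rendered = "{" + ",".join(str(v) for v in value) + "}"
        else:
            rendered = str(value)
        args += ["--set", f"{key}={rendered}"]
    return args
```

The returned list can be appended to a command such as `["helm", "install", "cilium", "cilium/cilium", "--version", "1.8.2", "--namespace", "kube-system"]` and passed to `subprocess.run` as a list, which avoids shell quoting issues around the braces.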

Once Cilium is deployed, we can check the Pods in the namespace it was deployed to:

(MoeLove) ➜ ~ kubectl -n kube-system get pods

NAME READY STATUS RESTARTS AGE

cilium-86dbc 1/1 Running 0 2m11s

cilium-cjcps 1/1 Running 0 2m11s

cilium-f8dtm 1/1 Running 0 2m11s

cilium-node-init-9r9cm 1/1 Running 1 2m11s

cilium-node-init-bkg28 1/1 Running 1 2m11s

cilium-node-init-jgx6r 1/1 Running 1 2m11s

cilium-node-init-s7xhx 1/1 Running 1 2m11s

cilium-operator-756cc96896-brlrh 1/1 Running 0 2m11s

cilium-t8kqc 1/1 Running 0 2m11s

coredns-f9fd979d6-7vfnq 1/1 Running 0 6m16s

coredns-f9fd979d6-h7rfw 1/1 Running 0 6m16s

etcd-kind-control-plane 1/1 Running 0 6m19s

hubble-relay-666ddfd69b-2lpsz 1/1 Running 0 2m11s

hubble-ui-7854cf65dc-ncj89 1/1 Running 0 2m11s

kube-apiserver-kind-control-plane 1/1 Running 0 6m19s

kube-controller-manager-kind-control-plane 1/1 Running 0 6m19s

kube-proxy-48rwk 1/1 Running 0 6m16s

kube-proxy-8mn58 1/1 Running 0 5m59s

kube-proxy-jptln 1/1 Running 0 5m59s

kube-proxy-pp24h 1/1 Running 0 5m59s

kube-scheduler-kind-control-plane 1/1 Running 0 6m19s
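In the listing above there are four cilium-xxxxx agent Pods, one for each of the four nodes. A quick scripted sanity check could look like the sketch below. It assumes agent Pods are named "cilium-" followed by a 5-character suffix, as in this listing, which excludes the cilium-node-init and cilium-operator Pods; both function names are hypothetical.

```python
import re

# Assumption: agent pods look like "cilium-86dbc" (prefix plus a 5-character suffix).
_AGENT_RE = re.compile(r"^cilium-[a-z0-9]{5}$")

def cilium_agents(pods_output: str):
    """Return (name, status) for each Cilium agent pod in `kubectl get pods` output."""
    agents = []
    for line in pods_output.strip().splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) >= 3 and _AGENT_RE.match(fields[0]):
            agents.append((fields[0], fields[2]))
    return agents

def agents_cover_nodes(pods_output: str, node_count: int) -> bool:
    """True when there is exactly one Running agent pod per node."""
    agents = cilium_agents(pods_output)
    return len(agents) == node_count and all(s == "Running" for _, s in agents)
```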

Check the Node status:

(MoeLove) ➜ ~ kubectl get nodes

NAME STATUS ROLES AGE VERSION

kind-control-plane Ready master 7m1s v1.19.0

kind-worker Ready <none> 6m26s v1.19.0

kind-worker2 Ready <none> 6m26s v1.19.0

kind-worker3 Ready <none> 6m26s v1.19.0

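Trying out Cilium's features

Introducing Hubble

When deploying Cilium with Helm above, we set several Hubble-related parameters without explaining what Hubble actually is. Here is a brief introduction.

Hubble is a fully distributed networking and security observability platform. It is built on top of Cilium and eBPF and provides deep visibility into the communication and behavior of services and of the networking infrastructure, in a completely transparent manner.

Because it is built on Cilium, Hubble can use eBPF to gain visibility. With eBPF, all visibility is programmable, which keeps overhead to a minimum while still providing visibility as deep and detailed as users need. For example:

- Understanding service dependencies. You can observe whether services communicate with each other, how often, and which status codes their HTTP calls return;

- Network monitoring and alerting. You can observe whether network connections are failing, whether the problem is at L4 or L7, whether DNS queries are failing, and so on;

- Application monitoring. You can observe HTTP 4xx/5xx error rates, as well as the 95th and 99th percentile latency between HTTP requests and responses;

- Security monitoring. You can observe which requests were denied by a Network Policy, which services resolved a particular domain name, and so on;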
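To make the application-monitoring terms concrete, here is a small sketch of what an "error rate" and a "95th percentile" mean when computed from raw samples. This is only an illustration of the arithmetic with the nearest-rank method; it is not how Hubble computes its metrics internally, and the function names are my own.

```python
import math

def error_rate(status_codes):
    """Fraction of responses whose HTTP status code is 4xx or 5xx."""
    if not status_codes:
        return 0.0
    errors = sum(1 for code in status_codes if 400 <= code <= 599)
    return errors / len(status_codes)

def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]
```

For example, with latency samples of 1 ms through 100 ms, `percentile(samples, 95)` picks the 95th smallest value, so a handful of very slow requests show up in the 99th percentile long before they move the average.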

Observability

We can use hubble observe directly to watch the connections in the current cluster:

(MoeLove) ➜ hubble-ui git:(master) kubectl exec -n kube-system -t ds/cilium -- hubble observe

TIMESTAMP SOURCE DESTINATION TYPE VERDICT SUMMARY

Sep 2 07:06:41.624 kube-system/coredns-f9fd979d6-h7rfw:8181 10.244.1.50:52404 to-stack FORWARDED TCP Flags: ACK, FIN

Sep 2 07:06:41.625 10.244.1.50:52404 kube-system/coredns-f9fd979d6-h7rfw:8181 to-endpoint FORWARDED TCP Flags: ACK, FIN

Sep 2 07:06:42.376 10.244.1.12:4240 10.244.0.76:45164 to-overlay FORWARDED TCP Flags: ACK

Sep 2 07:06:42.376 10.244.0.76:45164 10.244.1.12:4240 to-endpoint FORWARDED TCP Flags: ACK

Sep 2 07:06:42.778 10.244.1.50:37512 10.244.1.12:4240 to-endpoint FORWARDED TCP Flags: ACK, PSH

Sep 2 07:06:42.778 10.244.1.12:4240 10.244.1.50:37512 to-stack FORWARDED TCP Flags: ACK, PSH

Sep 2 07:06:44.941 10.244.1.50:59870 10.244.0.108:4240 to-overlay FORWARDED TCP Flags: ACK

Sep 2 07:06:44.941 10.244.1.12:4240 10.244.2.220:47616 to-overlay FORWARDED TCP Flags: ACK

Sep 2 07:06:44.941 10.244.1.50:52090 10.244.3.159:4240 to-overlay FORWARDED TCP Flags: ACK

Sep 2 07:06:44.941 10.244.1.50:52958 10.244.2.81:4240 to-overlay FORWARDED TCP Flags: ACK

Sep 2 07:06:44.941 10.244.2.220:47616 10.244.1.12:4240 to-endpoint FORWARDED TCP Flags: ACK

Sep 2 07:06:45.448 10.244.1.12:4240 10.244.3.111:54012 to-overlay FORWARDED TCP Flags: ACK

Sep 2 07:06:45.449 10.244.3.111:54012 10.244.1.12:4240 to-endpoint FORWARDED TCP Flags: ACK

Sep 2 07:06:47.631 kube-system/coredns-f9fd979d6-h7rfw:36120 172.18.0.4:6443 to-stack FORWARDED TCP Flags: ACK

Sep 2 07:06:47.822 10.244.1.50:60914 kube-system/coredns-f9fd979d6-h7rfw:8080 to-endpoint FORWARDED TCP Flags: SYN

Sep 2 07:06:47.822 kube-system/coredns-f9fd979d6-h7rfw:8080 10.244.1.50:60914 to-stack FORWARDED TCP Flags: SYN, ACK

Sep 2 07:06:47.822 10.244.1.50:60914 kube-system/coredns-f9fd979d6-h7rfw:8080 to-endpoint FORWARDED TCP Flags: ACK

Sep 2 07:06:47.823 kube-system/coredns-f9fd979d6-h7rfw:8080 10.244.1.50:60914 to-stack FORWARDED TCP Flags: ACK, PSH

Sep 2 07:06:47.823 kube-system/coredns-f9fd979d6-h7rfw:8080 10.244.1.50:60914 to-stack FORWARDED TCP Flags: ACK, FIN

Sep 2 07:06:47.823 10.244.1.50:60914 kube-system/coredns-f9fd979d6-h7rfw:8080 to-endpoint FORWARDED TCP Flags: ACK, PSH

As you can see, the output is quite detailed: both ends of each connection are shown, along with whether the packet carried ACK, SYN, and so on.
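Each row of this table has the same shape, so it is straightforward to turn into structured data for further filtering. The sketch below assumes the whitespace-separated layout shown above (a three-token timestamp, then source, destination, type, verdict, and a free-form summary); `Flow` and `parse_flow` are names I made up for illustration.

```python
from typing import NamedTuple

class Flow(NamedTuple):
    timestamp: str
    source: str
    destination: str
    flow_type: str
    verdict: str
    summary: str

def parse_flow(line: str) -> Flow:
    """Parse one data row of the `hubble observe` table shown above."""
    # The first 7 whitespace-separated tokens are fixed columns; the rest is the summary.
    parts = line.split(None, 7)
    if len(parts) < 7:
        raise ValueError(f"not a flow row: {line!r}")
    month, day, clock, source, destination, flow_type, verdict = parts[:7]
    summary = parts[7].strip() if len(parts) > 7 else ""
    return Flow(f"{month} {day} {clock}", source, destination, flow_type, verdict, summary)
```

With rows parsed this way, questions like "which flows touched port 8080" become simple list comprehensions over `Flow` objects.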

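Deploying a test application

Here we deploy a test application to experience Cilium's features first-hand. The official repository provides a connectivity-check test case; I have trimmed and modified it here to make it easier to follow.

It defines the following:

- 1 svc named echo-a, which exposes the echo-a test service;

- 4 deploys: 1 for the test service itself, plus three used to test connectivity to echo-a;

- 2 CiliumNetworkPolicy objects, which control whether connections to echo-a are allowed;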

---
apiVersion: v1
kind: Service
metadata:
  name: echo-a
spec:
  type: ClusterIP
  ports:
  - port: 80
  selector:
    name: echo-a

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: echo-a
spec:
  selector:
    matchLabels:
      name: echo-a
  replicas: 1
  template:
    metadata:
      labels:
        name: echo-a
    spec:
      containers:
      - name: echo-container
        image: docker.io/cilium/json-mock:1.0
        imagePullPolicy: IfNotPresent
        readinessProbe:
          exec:
            command: ["curl", "-sS", "--fail", "-o", "/dev/null", "localhost"]

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pod-to-a-allowed-cnp
spec:
  selector:
    matchLabels:
      name: pod-to-a-allowed-cnp
  replicas: 1
  template:
    metadata:
      labels:
        name: pod-to-a-allowed-cnp
    spec:
      containers:
      - name: pod-to-a-allowed-cnp-container
        image: docker.io/byrnedo/alpine-curl:0.1.8
        command: ["/bin/ash", "-c", "sleep 1000000000"]
        imagePullPolicy: IfNotPresent
        livenessProbe:
          exec:
            command: ["curl", "-sS", "--fail", "-o", "/dev/null", "echo-a"]
        readinessProbe:
          exec:
            command: ["curl", "-sS", "--fail", "-o", "/dev/null", "echo-a"]

---
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "pod-to-a-allowed-cnp"
spec:
  endpointSelector:
    matchLabels:
      name: pod-to-a-allowed-cnp
  egress:
  - toEndpoints:
    - matchLabels:
        name: echo-a
    toPorts:
    - ports:
      - port: "80"
        protocol: TCP
  - toEndpoints:
    - matchLabels:
        k8s:io.kubernetes.pod.namespace: kube-system
        k8s:k8s-app: kube-dns
    toPorts:
    - ports:
      - port: "53"
        protocol: UDP

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pod-to-a-l3-denied-cnp
spec:
  selector:
    matchLabels:
      name: pod-to-a-l3-denied-cnp
  replicas: 1
  template:
    metadata:
      labels:
        name: pod-to-a-l3-denied-cnp
    spec:
      containers:
      - name: pod-to-a-l3-denied-cnp-container
        image: docker.io/byrnedo/alpine-curl:0.1.8
        command: ["/bin/ash", "-c", "sleep 1000000000"]
        imagePullPolicy: IfNotPresent
        livenessProbe:
          timeoutSeconds: 7
          exec:
            command: ["ash", "-c", "! curl -sS --fail --connect-timeout 5 -o /dev/null echo-a"]
        readinessProbe:
          timeoutSeconds: 7
          exec:
            command: ["ash", "-c", "! curl -sS --fail --connect-timeout 5 -o /dev/null echo-a"]

---
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "pod-to-a-l3-denied-cnp"
spec:
  endpointSelector:
    matchLabels:
      name: pod-to-a-l3-denied-cnp
  egress:
  - toEndpoints:
    - matchLabels:
        k8s:io.kubernetes.pod.namespace: kube-system
        k8s:k8s-app: kube-dns
    toPorts:
    - ports:
      - port: "53"
        protocol: UDP

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pod-to-a
spec:
  selector:
    matchLabels:
      name: pod-to-a
  replicas: 1
  template:
    metadata:
      labels:
        name: pod-to-a
    spec:
      containers:
      - name: pod-to-a-container
        image: docker.io/byrnedo/alpine-curl:0.1.8
        command: ["/bin/ash", "-c", "sleep 1000000000"]
        imagePullPolicy: IfNotPresent
        livenessProbe:
          exec:
            command: ["curl", "-sS", "--fail", "-o", "/dev/null", "echo-a"]

Deploy it directly:

(MoeLove) ➜ ~ kubectl apply -f cilium-demo.yaml

service/echo-a created

deployment.apps/echo-a created

deployment.apps/pod-to-a-allowed-cnp created

ciliumnetworkpolicy.cilium.io/pod-to-a-allowed-cnp created

deployment.apps/pod-to-a-l3-denied-cnp created

ciliumnetworkpolicy.cilium.io/pod-to-a-l3-denied-cnp created

deployment.apps/pod-to-a created

Check the Pod status to confirm that everything is healthy:

(MoeLove) ➜ ~ kubectl get pods

NAME READY STATUS RESTARTS AGE

echo-a-8b6595b89-w9kt2 1/1 Running 0 49s

pod-to-a-5567c85856-xsg5b 1/1 Running 0 49s

pod-to-a-allowed-cnp-7b85c8db8-jrjhx 1/1 Running 0 49s

pod-to-a-l3-denied-cnp-7f64d7b7c4-fsxrm 1/1 Running 0 49s

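Observing from the command line

Next, use hubble observe to see the effect. The connections generated by the application we just deployed are already visible.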

(MoeLove) ➜ ~ kubectl exec -n kube-system -t ds/cilium -- hubble observe

TIMESTAMP SOURCE DESTINATION TYPE VERDICT SUMMARY

Sep 3 00:00:13.481 default/pod-to-a-5567c85856-xsg5b:60784 default/echo-a-8b6595b89-w9kt2:80 to-endpoint FORWARDED TCP Flags: ACK, PSH

Sep 3 00:00:15.429 kube-system/coredns-f9fd979d6-h7rfw:53 default/pod-to-a-allowed-cnp-7b85c8db8-jrjhx:43696 to-endpoint FORWARDED UDP

Sep 3 00:00:16.010 10.244.1.12:4240 10.244.2.220:50830 to-overlay FORWARDED TCP Flags: ACK

Sep 3 00:00:16.010 10.244.1.12:4240 10.244.1.50:40402 to-stack FORWARDED TCP Flags: ACK

Sep 3 00:00:16.010 10.244.1.50:40402 10.244.1.12:4240 to-endpoint FORWARDED TCP Flags: ACK

Sep 3 00:00:16.011 10.244.2.220:50830 10.244.1.12:4240 to-endpoint FORWARDED TCP Flags: ACK

Sep 3 00:00:16.523 10.244.1.12:4240 10.244.3.111:57242 to-overlay FORWARDED TCP Flags: ACK

Sep 3 00:00:16.523 10.244.3.111:57242 10.244.1.12:4240 to-endpoint FORWARDED TCP Flags: ACK

Sep 3 00:00:21.376 kube-system/coredns-f9fd979d6-h7rfw:53 default/pod-to-a-l3-denied-cnp-7f64d7b7c4-fsxrm:44785 to-overlay FORWARDED UDP

Sep 3 00:00:21.377 kube-system/coredns-f9fd979d6-h7rfw:53 default/pod-to-a-l3-denied-cnp-7f64d7b7c4-fsxrm:44785 to-overlay FORWARDED UDP

Sep 3 00:00:23.896 kube-system/coredns-f9fd979d6-h7rfw:36120 172.18.0.4:6443 to-stack FORWARDED TCP Flags: ACK

Sep 3 00:00:25.428 default/pod-to-a-allowed-cnp-7b85c8db8-jrjhx:55678 default/echo-a-8b6595b89-w9kt2:80 L3-L4 FORWARDED TCP Flags: SYN

Sep 3 00:00:25.428 default/pod-to-a-allowed-cnp-7b85c8db8-jrjhx:55678 default/echo-a-8b6595b89-w9kt2:80 to-endpoint FORWARDED TCP Flags: SYN

Sep 3 00:00:25.428 default/echo-a-8b6595b89-w9kt2:80 default/pod-to-a-allowed-cnp-7b85c8db8-jrjhx:55678 to-endpoint FORWARDED TCP Flags: SYN, ACK

Sep 3 00:00:25.428 default/pod-to-a-allowed-cnp-7b85c8db8-jrjhx:55678 default/echo-a-8b6595b89-w9kt2:80 to-endpoint FORWARDED TCP Flags: ACK

Sep 3 00:00:25.428 default/pod-to-a-allowed-cnp-7b85c8db8-jrjhx:55678 default/echo-a-8b6595b89-w9kt2:80 to-endpoint FORWARDED TCP Flags: ACK, PSH

Sep 3 00:00:25.429 default/pod-to-a-allowed-cnp-7b85c8db8-jrjhx:55678 default/echo-a-8b6595b89-w9kt2:80 to-endpoint FORWARDED TCP Flags: ACK, FIN

Sep 3 00:00:29.546 10.244.1.50:57770 kube-system/coredns-f9fd979d6-h7rfw:8080 to-endpoint FORWARDED TCP Flags: SYN

Sep 3 00:00:29.546 kube-system/coredns-f9fd979d6-h7rfw:8080 10.244.1.50:57770 to-stack FORWARDED TCP Flags: SYN, ACK

Sep 3 00:00:29.546 10.244.1.50:57770 kube-system/coredns-f9fd979d6-h7rfw:8080 to-endpoint FORWARDED TCP Flags: ACK

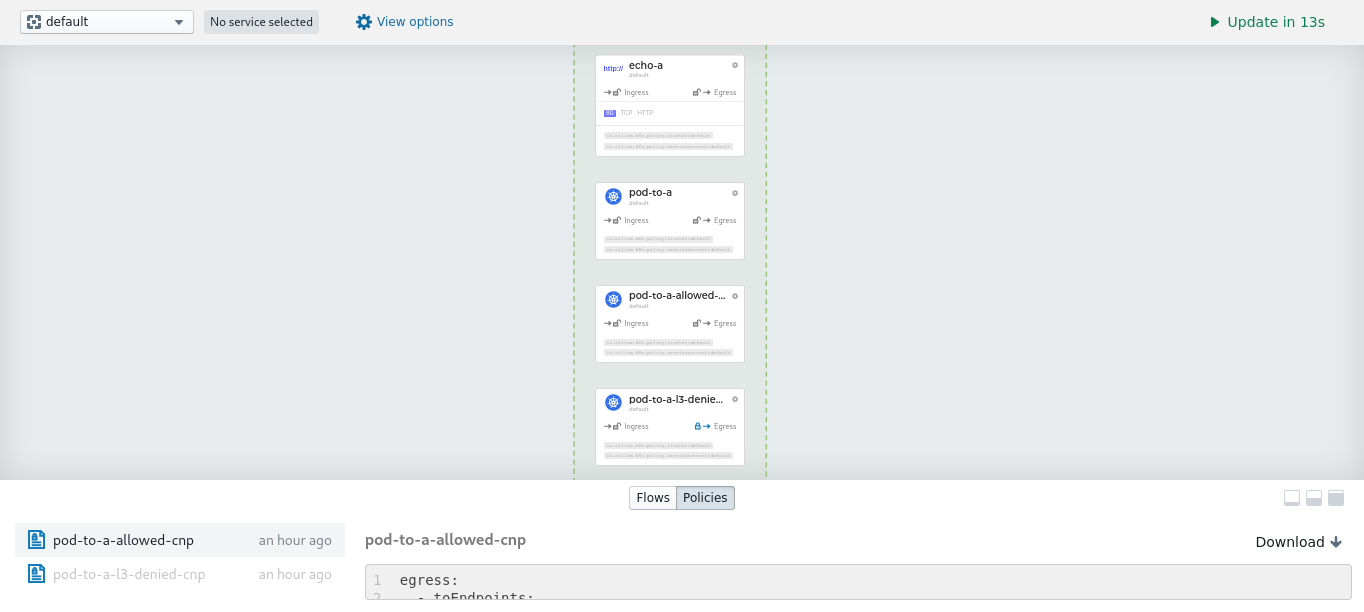
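The "service dependencies" idea mentioned earlier can be approximated from this text output alone: a bare SYN delivered to an endpoint marks one Pod opening a connection to another. The following is a rough sketch under several assumptions of mine: it only looks at rows of type to-endpoint (to avoid counting the same SYN at several trace points), it ignores rows where either side is a bare IP, and it only sees flows from the one agent that was queried. The function names are hypothetical.

```python
from collections import Counter

def pod_of(endpoint: str):
    """Return 'namespace/pod' for 'namespace/pod:port', or None for a bare IP:port."""
    if "/" not in endpoint:
        return None
    return endpoint.rsplit(":", 1)[0]

def dependency_edges(observe_output: str) -> Counter:
    """Count new TCP connections per (source pod, destination pod) pair."""
    edges = Counter()
    for line in observe_output.splitlines():
        parts = line.split(None, 7)
        if len(parts) < 8:
            continue  # header, blank line, or a row without a summary
        source, destination = pod_of(parts[3]), pod_of(parts[4])
        flow_type, verdict, summary = parts[5], parts[6], parts[7].strip()
        # "TCP Flags: SYN" is the opening packet; "SYN, ACK" is the reply and is skipped.
        if (source and destination and flow_type == "to-endpoint"
                and verdict == "FORWARDED" and summary == "TCP Flags: SYN"):
            edges[(source, destination)] += 1
    return edges
```

Run against the output above, this would report a single edge from the pod-to-a-allowed-cnp Pod to the echo-a Pod.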

Observing with Hubble UI

Remember the Hubble parameters we set when deploying Cilium above? Now we can use Hubble UI to see what they give us.

First check whether the hubble-ui svc exists in the kube-system namespace.

(MoeLove) ➜ kubectl -n kube-system get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

hubble-metrics ClusterIP None <none> 9091/TCP 4m31s

hubble-relay ClusterIP 10.102.90.19 <none> 80/TCP 4m31s

hubble-ui ClusterIP 10.96.69.234 <none> 80/TCP 4m31s

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 8m51s

Use kubectl port-forward to access Hubble UI from your local machine.

(MoeLove) ➜ ~ kubectl -n kube-system port-forward svc/hubble-ui 12000:80

Forwarding from 127.0.0.1:12000 -> 12000

Forwarding from [::1]:12000 -> 12000

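Then open http://127.0.0.1:12000 in a browser.

You can see all the Pods we just deployed, along with the corresponding CiliumNetworkPolicy information. I won't go into detail here; feel free to explore it yourself.

Observing with Hubble metrics

We can also observe the cluster through the metrics that Hubble exposes: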

(MoeLove) ➜ ~ kubectl port-forward -n kube-system ds/cilium 19091:9091

Forwarding from 127.0.0.1:19091 -> 9091

Forwarding from [::1]:19091 -> 9091

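Here is a quick look at the content. It includes statistics on requests, responses, and drops, as well as packet counts per destination port. Feel free to explore it yourself.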

(MoeLove) ➜ ~ curl -s localhost:19091/metrics | head -n 22

# HELP hubble_dns_queries_total Number of DNS queries observed

# TYPE hubble_dns_queries_total counter

hubble_dns_queries_total{ips_returned="0",qtypes="A",rcode=""} 1165

hubble_dns_queries_total{ips_returned="0",qtypes="AAAA",rcode=""} 1165

# HELP hubble_dns_response_types_total Number of DNS queries observed

# TYPE hubble_dns_response_types_total counter

hubble_dns_response_types_total{qtypes="A",type="A"} 233

hubble_dns_response_types_total{qtypes="AAAA",type="AAAA"} 233

# HELP hubble_dns_responses_total Number of DNS queries observed

# TYPE hubble_dns_responses_total counter

hubble_dns_responses_total{ips_returned="0",qtypes="A",rcode="Non-Existent Domain"} 932

hubble_dns_responses_total{ips_returned="0",qtypes="AAAA",rcode="Non-Existent Domain"} 932

hubble_dns_responses_total{ips_returned="1",qtypes="A",rcode="No Error"} 233

hubble_dns_responses_total{ips_returned="1",qtypes="AAAA",rcode="No Error"} 233

# HELP hubble_drop_total Number of drops

# TYPE hubble_drop_total counter

hubble_drop_total{protocol="ICMPv4",reason="Policy denied"} 459

hubble_drop_total{protocol="ICMPv4",reason="Unsupported protocol for NAT masquerade"} 731

hubble_drop_total{protocol="ICMPv6",reason="Unsupported L3 protocol"} 213

hubble_drop_total{protocol="TCP",reason="Policy denied"} 1425

hubble_drop_total{protocol="UDP",reason="Stale or unroutable IP"} 6

hubble_drop_total{protocol="Unknown flow",reason="Policy denied"} 1884
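The endpoint serves the Prometheus text format, so the samples are easy to aggregate without a full Prometheus setup. As a minimal sketch (a simplified parser of my own that handles the simple label values seen above, not a complete implementation of the exposition format), the following sums hubble_drop_total by its reason label:

```python
import re
from collections import defaultdict

# name{labels} value [timestamp]; the label block and timestamp are optional.
_SAMPLE_RE = re.compile(
    r'^(?P<name>[A-Za-z_:][A-Za-z0-9_:]*)(?:\{(?P<labels>.*)\})?\s+(?P<value>\S+)(?:\s+\d+)?$'
)
_LABEL_RE = re.compile(r'(\w+)="((?:[^"\\]|\\.)*)"')

def parse_samples(text: str):
    """Yield (metric_name, labels_dict, value) per sample line; comment lines are skipped."""
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        match = _SAMPLE_RE.match(line)
        if match:
            labels = dict(_LABEL_RE.findall(match.group("labels") or ""))
            yield match.group("name"), labels, float(match.group("value"))

def drops_by_reason(text: str) -> dict:
    """Sum hubble_drop_total across protocols, keyed by the `reason` label."""
    totals = defaultdict(float)
    for name, labels, value in parse_samples(text):
        if name == "hubble_drop_total":
            totals[labels.get("reason", "")] += value
    return dict(totals)
```

Applied to the output above, the "Policy denied" entries for ICMPv4, TCP, and "Unknown flow" are added together, which gives a quick view of how much traffic the network policies are rejecting.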

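Verifying the effect of CiliumNetworkPolicy

With all that covered, let's verify the actual effect of the CiliumNetworkPolicy objects we just deployed.

Below are the test Pods deployed earlier. We will use them to access the echo-a svc.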

(MoeLove) ➜ ~ kubectl get pods

NAME READY STATUS RESTARTS AGE

echo-a-8b6595b89-w9kt2 1/1 Running 0 79m

pod-to-a-5567c85856-xsg5b 1/1 Running 0 79m

pod-to-a-allowed-cnp-7b85c8db8-jrjhx 1/1 Running 0 79m

pod-to-a-l3-denied-cnp-7f64d7b7c4-fsxrm 1/1 Running 0 79m

pod-to-a is a Pod with no CiliumNetworkPolicy rules configured:

(MoeLove) ➜ ~ kubectl exec pod-to-a-5567c85856-xsg5b -- curl -sI --connect-timeout 5 echo-a

HTTP/1.1 200 OK

X-Powered-By: Express

Vary: Origin, Accept-Encoding

Access-Control-Allow-Credentials: true

Accept-Ranges: bytes

Cache-Control: public, max-age=0

Last-Modified: Sat, 26 Oct 1985 08:15:00 GMT

ETag: W/"83d-7438674ba0"

Content-Type: text/html; charset=UTF-8

Content-Length: 2109

Date: Thu, 03 Sep 2020 00:54:05 GMT

Connection: keep-alive

pod-to-a-allowed-cnp is configured to allow access to echo-a over TCP:

(MoeLove) ➜ ~ kubectl exec pod-to-a-allowed-cnp-7b85c8db8-jrjhx -- curl -sI --connect-timeout 5 echo-a

HTTP/1.1 200 OK

X-Powered-By: Express

Vary: Origin, Accept-Encoding

Access-Control-Allow-Credentials: true

Accept-Ranges: bytes

Cache-Control: public, max-age=0

Last-Modified: Sat, 26 Oct 1985 08:15:00 GMT

ETag: W/"83d-7438674ba0"

Content-Type: text/html; charset=UTF-8

Content-Length: 2109

Date: Thu, 03 Sep 2020 01:10:27 GMT

Connection: keep-alive

pod-to-a-l3-denied-cnp is only allowed to access DNS; no rule allows it to access echo-a:

(MoeLove) ➜ ~ kubectl exec pod-to-a-l3-denied-cnp-7f64d7b7c4-fsxrm -- curl -sI --connect-timeout 5 echo-a

command terminated with exit code 28

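As you can see, once a CiliumNetworkPolicy is applied to a Pod, any access without a matching allow rule is denied. Exit code 28 is curl's timeout error, which here means the connection attempt never completed.

For example, we can use the two Pods that have a CiliumNetworkPolicy applied to access a public domain name: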

(MoeLove) ➜ ~ kubectl exec pod-to-a-allowed-cnp-7b85c8db8-jrjhx -- curl -sI --connect-timeout 5 moelove.info

command terminated with exit code 28

(MoeLove) ➜ ~ kubectl exec pod-to-a-l3-denied-cnp-7f64d7b7c4-fsxrm -- curl -sI --connect-timeout 5 moelove.info

command terminated with exit code 28

As you can see, neither of them can reach it.
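The behavior seen in these four tests can be summarized with a toy model: a Pod that no policy selects is unrestricted, while a Pod selected by a policy may only send traffic that some egress rule allows. The sketch below is a deliberately simplified model for building intuition, not Cilium's implementation; it ignores identities, label prefixes such as `k8s:`, L7 rules, and ingress, and every name in it is hypothetical.

```python
def selects(selector: dict, labels: dict) -> bool:
    """A matchLabels-style selector matches when every key/value pair is present."""
    return all(labels.get(k) == v for k, v in selector.items())

def egress_allowed(src_labels, dst_labels, port, protocol, policies) -> bool:
    """No selecting policy means allow; otherwise some egress rule must match."""
    applicable = [p for p in policies if selects(p["endpointSelector"], src_labels)]
    if not applicable:
        return True  # no policy selects the source, so egress is unrestricted
    for policy in applicable:
        for rule in policy["egress"]:
            to_ok = any(selects(sel, dst_labels) for sel in rule["toEndpoints"])
            port_ok = (str(port), protocol) in rule["toPorts"]
            if to_ok and port_ok:
                return True
    return False  # selected by a policy, but nothing allows this flow

# Simplified stand-ins for the labels and the two policies deployed above.
ECHO_A = {"name": "echo-a"}
KUBE_DNS = {"namespace": "kube-system", "k8s-app": "kube-dns"}
EXTERNAL = {}  # a destination outside the cluster carries none of these labels
POLICIES = [
    {"endpointSelector": {"name": "pod-to-a-allowed-cnp"},
     "egress": [
         {"toEndpoints": [ECHO_A], "toPorts": [("80", "TCP")]},
         {"toEndpoints": [KUBE_DNS], "toPorts": [("53", "UDP")]},
     ]},
    {"endpointSelector": {"name": "pod-to-a-l3-denied-cnp"},
     "egress": [{"toEndpoints": [KUBE_DNS], "toPorts": [("53", "UDP")]}]},
]
```

In this model, `egress_allowed({"name": "pod-to-a-l3-denied-cnp"}, ECHO_A, 80, "TCP", POLICIES)` is False while the same call for `pod-to-a` is True, and both policy-selected Pods are denied when the destination is `EXTERNAL`, matching the curl results above.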

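Summary

This section introduced Cilium and Hubble.

Using a Kubernetes cluster created with KIND, we deployed Cilium and its related components, and walked through an example showing how to observe traffic with hubble observe, Hubble UI, and Hubble metrics.

We also verified the actual effect of CiliumNetworkPolicy through hands-on practice.

My work writing code for Docker touches parts such as LSM and seccomp, so along the way I studied eBPF and its related technologies (I will share that material later).

I started learning and researching Cilium in the first half of 2019, but as I wrote in last year's article "K8S Ecosystem Weekly | Cilium 1.6 released, a 100% replacement for kube-proxy":

A few words on how I see Cilium:

- Is it impressive? Yes.

- Is it worth studying? Yes.

- Would I put it in my own cluster to replace kube-proxy? No, at least not for now.

If you want to study eBPF or XDP through Cilium, I do recommend taking a look. It is a very good project, and working through it will deepen your understanding of networking considerably. Put it this way: if you thoroughly understand Cilium's source code and the principles behind it, that is a serious achievement.

As for whether to replace kube-proxy, I would not do so, at least for now. There are many ways to solve a problem, and replacing a core component is not necessarily the most worthwhile one.

Cilium is a project and technology worth learning and studying, but I have not yet put it into production (it is one of the few technologies I have spent a lot of effort researching without using in production).

That said, Cilium now seems to have gained a certain market and momentum, so it is time to reconsider. I will continue to share technical articles on Cilium and eBPF, so stay tuned.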